
NEWS

Conference Images

Here you can view the images from the conference.

Conference Proceedings

Here you can access the proceedings:

Program out NOW!

Here you can download the conference booklet with the program, information for authors, and all abstracts: DOWNLOAD BOOKLET

Conference Dinner

The conference dinner will take place at Karls Cafe, Katschhof 1, in the Museum Charlemagne from 18:00 to 22:00. From 20:00 to 22:00, you will also have the opportunity to visit the museum. Its permanent exhibition covers Aachen’s history from its foundation to the present day, including the Roman thermal baths, Charlemagne’s Palace, and Napoleon’s chic spa town. More information can be found here: MUSEUM

Demos at IHTA

We have prepared demos at RWTH Aachen University (Institute for Hearing Technology and Acoustics + RWTH Virtual Reality and Immersive Visualization). The demos will most likely include a tour through the CAVE, a behind-the-scenes tour, an audiometry session for experts in our MobiLab, and VR demos.

Date: Tuesday, 20 June, 16:30-18:30

Where: Kopernikusstraße 5 + 6

How to participate: Due to limited availability, please sign up for the demos at the registration desk upon arrival on Tuesday.

How to get there: Staff will be waiting in front of the conference location SuperC at 16:15 to guide you to Kopernikusstraße 5. It is a short, 10-minute walk. If you are unable to walk, please let us know and we will organize a taxi.

Aachen Cathedral © Stadt Aachen / Andreas Herrmann

Conference Location: SuperC © Stadt Aachen / Peter Hinschläger

Institute for Hearing Technology and Acoustics, IHTA © Martin Guski

AUDICTIVE Conference 2023

With recent developments in hardware and software technologies, audiovisual virtual reality (VR) has reached a high level of perceptual plausibility that overcomes the limitations of simple laboratory settings. Interactive VR technology is expected to help researchers understand auditory cognition in complex audiovisual scenes that are close to real life, including acoustically adverse situations such as classrooms, open-plan offices, multi-party communication, or outdoor scenarios with multiple (and moving) sound sources. In particular, VR enables controlled research on how acoustic and visual components and further contextual factors affect the ability to interact with the scene, e.g. by moving freely within it.

To best integrate research in auditory cognition and VR, aspects from three scientific disciplines must be addressed: acoustics, cognitive psychology, and virtual reality/computer science. Cooperation between researchers from these fields creates synergies that no single discipline can achieve. The AUDICTIVE conference targets fundamental research across the three disciplines and addresses three research priorities: (a) "auditory cognition," (b) "interactive audiovisual virtual environments," and (c) "quality evaluation methods," the latter being located at the interface between (a) and (b). We invite original submissions that contribute to fundamental research and interdisciplinary findings. The conference brings together researchers from acoustics, cognitive psychology, and virtual reality, and also warmly welcomes related presentations from other fields. The theme of the conference can be addressed through three interrelated subthemes:

- 1. To what extent are recent theories of auditory cognition and related empirical findings applicable in a more life-like environment created by interactive audiovisual VR?

- 2. How can the realism and vibrancy of audiovisual virtual environments be brought to a higher level, in terms of both audiovisual representation and user interaction, based on findings from auditory cognition research?

- 3. What are suitable and novel quality evaluation methods that enable the systematic and efficient assessment of VR systems (cf. b) in light of the targeted auditory cognition research (cf. a)?

Conference Location

The conference will be a leading event in the areas of acoustics, auditory cognition, and interactive virtual environments for research purposes. It is a great opportunity for participants to meet in person, expand their knowledge, and share new techniques and expertise.

Conference Location: SuperC, RWTH Aachen University, Templergraben 57, 52062 Aachen

Welcome Drinks: 19 June 2023, 6 pm at the conference location SuperC, RWTH Aachen University, Templergraben 57, 52062 Aachen

Conference Dinner: 21 June 2023, 6 pm, Centre Charlemagne - Karl's

Demo Tour: 20 June 2023, Institute for Hearing Technology and Acoustics, Kopernikusstraße 5, 52074 Aachen

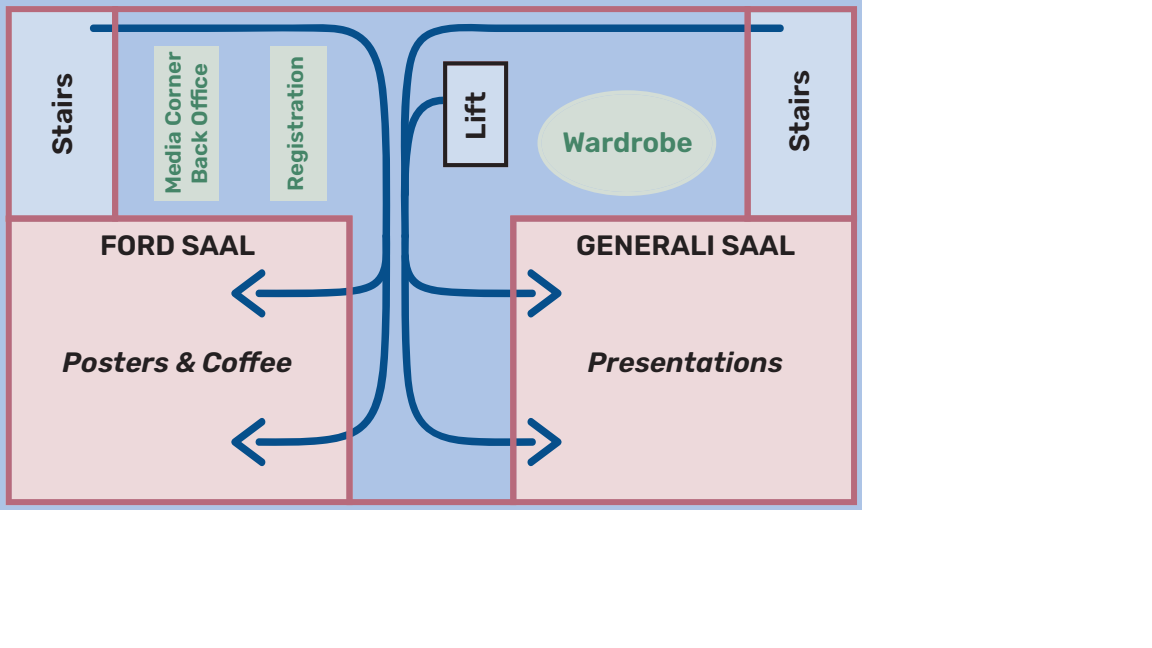

Floorplan:


Important Dates

- 09 January 2023: Registration and abstract submission open

- 17 February 2023: Abstract submission deadline (max. 200 words), extended from 10 February 2023

- 28 February 2023: Notification of acceptance

- 5 May 2023: Final paper deadline, extended from 28 April 2023

- 18 June 2023: Registration closes

- 19 June - 22 June 2023: Conference at SuperC, RWTH Aachen University

Keynotes

June 20: Barbara Shinn-Cunningham

How peripheral and central factors together control auditory attention in complex settings

In healthy, normal-hearing listeners, complex negotiations between volitional, top-down attention and involuntary, bottom-up attention allow you to focus on and understand whichever talker matters at a given moment, while also ensuring that you evaluate and respond to new sound sources around you. This talk explores how peripheral and central factors together determine how successfully you communicate in everyday environments with both expected and unexpected sounds, focusing especially on the cortical networks that mediate competition for attention.

June 21: Frank Steinicke

B(l)ending Natural and Artificial Intelligence and Realities

The fusion of extended reality (XR) and artificial intelligence (AI) will revolutionize human-computer interaction. XR/AI technologies and methods will enable scenarios with seamless transitions, interactions, and transformations between real and virtual objects along the reality-virtuality continuum, indistinguishable from corresponding real-world interactions. Yet today's immersive technology is still decades away from the ultimate display. Nevertheless, imperfections of the human perceptual, cognitive, and motor systems can be exploited to bend reality in such a way that compelling immersive experiences can be achieved. In this talk, we will review some XR illusions that bring us closer to the ultimate blended intelligence and reality.

June 22: Alexandra Bendixen

Sensory prediction in auditory scene analysis, audiovisual interplay, and VR evaluation

How humans make sense of the complex auditory world around them can be studied by creating scenes with multiple interpretations and recording listeners’ scene perception over time. Using this approach, we investigate factors that stabilize auditory perception in ambiguous scenes, such as the predictability of the acoustic input, and how the use of predictability may change with auditory aging. Many of our paradigms rely on subjective reports of listeners’ perception, and we continuously work on methods for verifying those reports based on physiological responses. Our recent combinations of auditory multistability with eye tracking have given us novel insights into the interplay of auditory and visual multistability, with implications for our general understanding of scene analysis across the senses. Most recently, we have turned the psychophysiological measurement logic around and now use brain responses related to sensory prediction to evaluate certain aspects of virtual reality (VR), such as the adequacy of VR latencies.


Author information

Oral presentations

Each presentation slot is 30 min (20 min presentation + 8 min discussion + 2 min for the change to the next presenter). A computer with PowerPoint and Adobe Acrobat will be provided, as will loudspeakers. The conference language is English, so presentations should preferably be given in English, and slides should be prepared in English as well. Please upload your slides before your presentation; a laptop will be provided for this in the MEDIA CORNER.

Poster Pitches + Poster Session

Each poster pitch slot is 5 min (3 min pitch + 2 min for the change to the next presenter). A computer with PowerPoint and Adobe Acrobat will be provided, as will loudspeakers. The conference language is English, so pitches should preferably be given in English, and posters must be in English. Please upload your slides before your pitch; a laptop will be provided for this in the MEDIA CORNER. The poster session, with coffee and snacks, will follow immediately after the pitches. We will provide poster boards measuring 196 cm x 96 cm (height x width). Please prepare your poster in DIN A0 portrait format.

Submission

Please submit your contribution in one of the following formats:

- 1) If you are a member of the AUDICTIVE project: Oral presentations (20 min + 7 min Q & A) and short paper (2-4 pages).

- 2) If you are not a member of the AUDICTIVE project: Poster presentations and short paper (2-4 pages).

Templates (.tex and .docx) for the full paper submission can be downloaded here: DOWNLOAD

Conference Proceedings: All contributions will be published as the Conference Proceedings of the AUDICTIVE Conference 2023 via RWTH Publications, including a DOI. Therefore, every corresponding author must download the AUTHOR CONTRACT, sign it, and upload it as an additional file in the submission system under the category "Author Contract".


Registration

Registration opens on 9 January 2023. To register for the conference, you first have to create an account. Once the account has been created, you can log in with your username and password, register as a participant, and submit an abstract. Please follow this link: CREATE ACCOUNT

The conference is free of charge and includes refreshments during the day and the conference dinner. For an accepted submission to be included in the conference program and proceedings, at least one of its authors has to register and present it at the conference.
